Toward intelligent and programmable virtualized NG RANs

By Rahman Doost-Mohammady, Santiago Segarra, and Ashutosh Sabharwal

5G and beyond will bring a paradigm shift in wireless networks relative to their 4G predecessor, transforming many industries and affecting our way of living. One of the key advantages of 5G is its ability to support a much larger number of devices, a higher volume of data traffic, and more stringent end-to-end latency limits than previous generations of wireless communication. This will enable a wide range of new applications and services, such as self-driving cars, smart cities, and virtual reality, all of which will require low latency and high reliability.

One of the catalysts in achieving the 5G goals will be the use of artificial intelligence (AI), not only to enable these vertical applications but also to optimize and accelerate the performance of the 5G network itself. AI methods can help optimize 5G network performance by learning from network performance data and adapting to changing conditions in real time; these methods are especially effective when the relationship between network parameters and performance is complex and analytically intractable. AI-aided network design could also improve the efficiency of 5G networks by enabling dynamic resource allocation and intelligent scheduling of data transmissions. For example, AI algorithms could analyze traffic patterns, predict future demand, and allocate resources accordingly, maximizing network utilization while reducing costs and improving the user experience.

To realize the above goals, there is a need for real-world data to train AI models. In particular, it is essential for researchers to have access to a 5G-compliant software-defined system that enables dataset generation at each layer of the network. The NVIDIA Aerial™ SDK is an excellent solution, powered by NVIDIA GPUs and NVIDIA's accelerated networking architecture.

In this blog post, we give an overview of a few breakthrough research directions that the NVIDIA Aerial platform could enable. In all of these directions, the platform gives researchers the following capabilities:

- Representative datasets

- End-to-end over-the-air performance benchmarking

- Real-time implementation and performance evaluation of new algorithms

Next, we will describe each research direction that we are pursuing at Rice University.

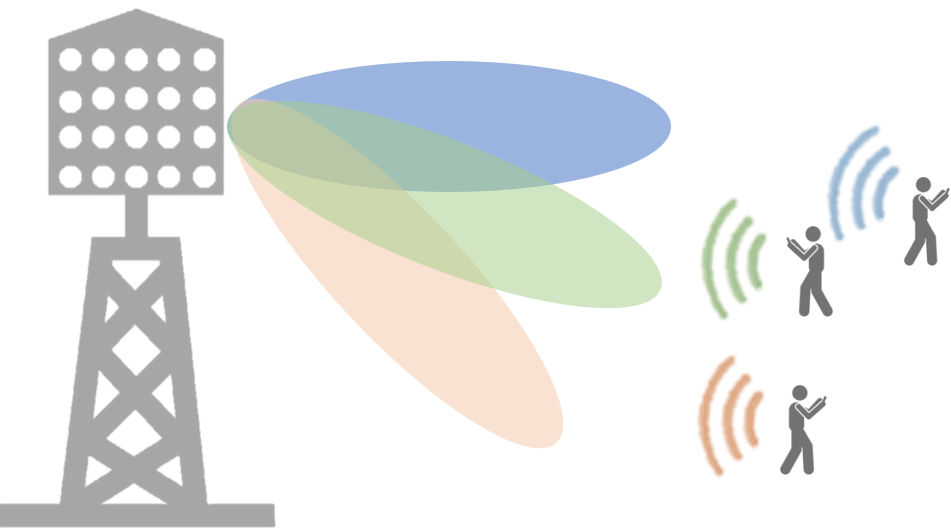

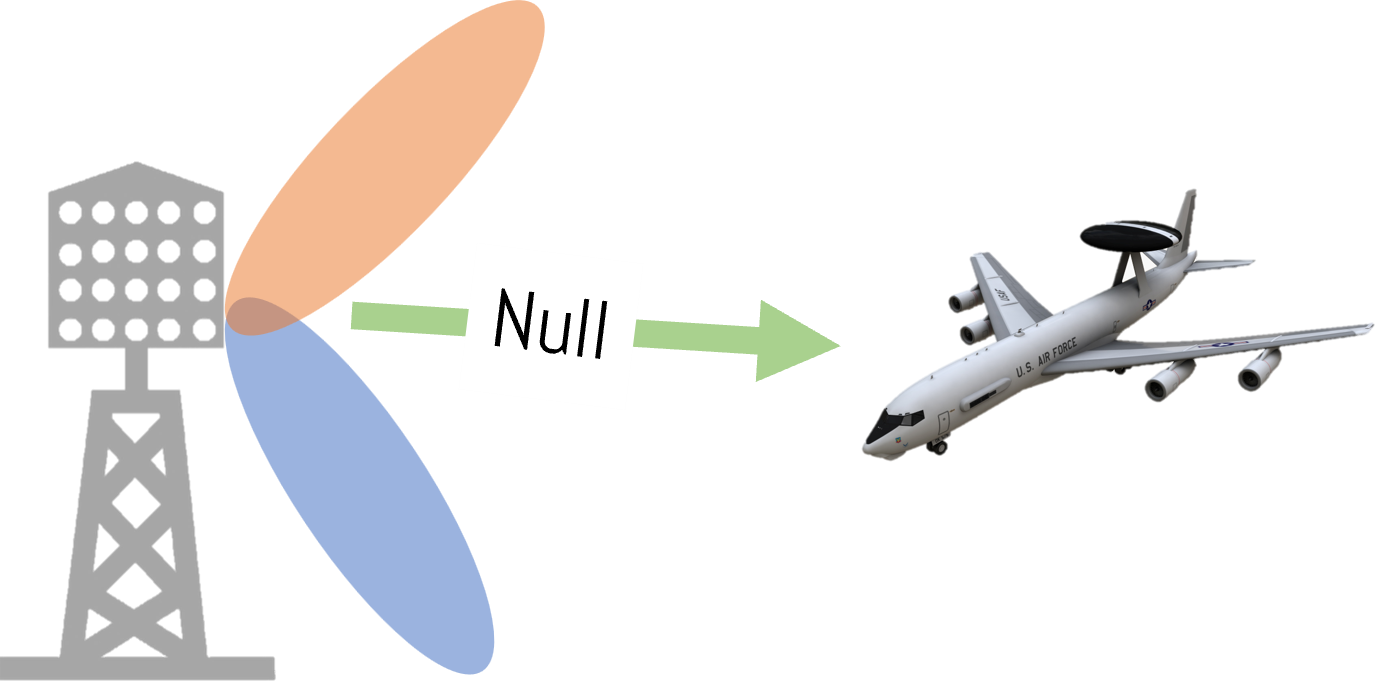

Figure 1. MIMO research at Rice includes neural network-based massive MIMO detection and radar detection using massive MIMO antennas.

Deep learning-based MIMO Detection

MIMO detection is a decades-old topic concerned with decoupling multiple spatial streams transmitted by multiple user antennas on the same time-frequency resource. The provably optimal detection algorithm, i.e., maximum-likelihood detection, and its more efficient variants, such as the sphere decoder, have very high computational complexity that grows unfavorably with the number of antennas at the base station and the user terminals, as well as with the size of the symbol constellation. This complexity currently prohibits their use in real-world systems. Instead, linear but suboptimal algorithms are used in practice, as they have much lower computational complexity. Recently, there has been a surge in research that uses machine learning models to solve the problem. From deep unrolling [1] to the use of transformers [2], the results have been promising in simulation.
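To make the complexity gap concrete, the following is a minimal NumPy sketch, assuming a narrowband model y = Hx + n with QPSK symbols and a random Gaussian channel. All function names and parameters here are illustrative choices, not part of the Aerial SDK or of the methods in [1] and [2]. It contrasts an exhaustive maximum-likelihood search with a linear MMSE detector.

```python
import itertools
import numpy as np

def qpsk_constellation():
    """Unit-energy QPSK symbols (an assumed constellation for illustration)."""
    return np.array([1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]) / np.sqrt(2)

def ml_detect(y, H, constellation):
    """Exhaustive maximum-likelihood search: argmin ||y - Hx||^2 over all candidates."""
    n_users = H.shape[1]
    best_x, best_cost = None, np.inf
    # There are len(constellation) ** n_users candidates, hence the exponential cost.
    for candidate in itertools.product(constellation, repeat=n_users):
        x = np.array(candidate)
        cost = np.linalg.norm(y - H @ x) ** 2
        if cost < best_cost:
            best_x, best_cost = x, cost
    return best_x

def mmse_detect(y, H, constellation, noise_var):
    """Linear MMSE equalization followed by per-stream nearest-symbol slicing."""
    n_users = H.shape[1]
    gram = H.conj().T @ H + noise_var * np.eye(n_users)
    x_soft = np.linalg.solve(gram, H.conj().T @ y)
    nearest = np.argmin(np.abs(x_soft[:, None] - constellation[None, :]), axis=1)
    return constellation[nearest]

def run_demo(n_rx=8, n_users=3, noise_var=0.01, seed=0):
    """Simulate one channel use and run both detectors on it."""
    rng = np.random.default_rng(seed)
    constellation = qpsk_constellation()
    H = (rng.standard_normal((n_rx, n_users))
         + 1j * rng.standard_normal((n_rx, n_users))) / np.sqrt(2)
    x = rng.choice(constellation, size=n_users)
    noise = np.sqrt(noise_var / 2) * (rng.standard_normal(n_rx)
                                      + 1j * rng.standard_normal(n_rx))
    y = H @ x + noise
    x_ml = ml_detect(y, H, constellation)
    x_mmse = mmse_detect(y, H, constellation, noise_var)
    return H, y, x, x_ml, x_mmse

if __name__ == "__main__":
    H, y, x, x_ml, x_mmse = run_demo()
    print("ML symbol errors:  ", int(np.sum(~np.isclose(x_ml, x))))
    print("MMSE symbol errors:", int(np.sum(~np.isclose(x_mmse, x))))
```

With 3 users and QPSK the exhaustive search visits 4^3 = 64 candidates. With 16 users and 64-QAM it would visit 64^16, which is why linear detectors dominate in practice despite their performance loss.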

At Rice, we have developed a new method based on Langevin dynamics [3] that has been shown to be effective in getting very close to optimal performance. The next step is to provide experimental evidence that AI-based methods will deliver these gains in real systems. We are currently exploring the effectiveness of these AI-based detection methods using real-world 5G-compliant data collected from the Aerial platform. Follow-up research will address a real-time implementation of such algorithms (through online training and inference) on the NVIDIA GPUs that run Aerial.
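For readers unfamiliar with Langevin dynamics, the generic (unadjusted) Langevin iteration for sampling from a posterior can be written as below. This is the textbook form under an assumed real-valued model $y = Hx + n$ with $n \sim \mathcal{N}(0, \sigma_n^2 I)$; the symbols $\epsilon$, $w_t$, and $\sigma_n$ are notation introduced here for illustration, and the specific score function, step sizes, and annealing schedule used in [3] are not reproduced.

$$
x_{t+1} = x_t + \epsilon \, \nabla_x \log p(x_t \mid y) + \sqrt{2\epsilon}\, w_t, \qquad w_t \sim \mathcal{N}(0, I)
$$

By Bayes' rule the posterior score splits into a likelihood term and a prior term:

$$
\nabla_x \log p(x \mid y) = \nabla_x \log p(y \mid x) + \nabla_x \log p(x), \qquad \nabla_x \log p(y \mid x) = \frac{1}{\sigma_n^2} H^{\top} (y - Hx)
$$

Because the symbols are drawn from a discrete constellation, the prior has no well-defined gradient. Annealed variants typically address this by running the iteration on a sequence of noise-smoothed versions of the prior with decreasing smoothing levels.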

Radar Detection and Coexistence

The demand for sub-6 GHz spectrum to deploy 5G networks has forced the federal government to rethink the allocation of several bands used for radar applications. This has already resulted in the allocation of the 3.55-3.7 GHz band, known as the CBRS band, for secondary use in the US, with US Navy radars remaining the primary users. Further, the FCC is evaluating the allocation of 3.1-3.55 GHz, home to airborne radars, for secondary use by 5G operators. While secondary use in the former band is managed through spectrum databases, deploying 5G networks that share the latter band with airborne radars will be challenging, as it will require stringent interference avoidance criteria. The presence of airborne radars is typically not public, and thus 5G networks must independently detect their presence through spectrum sensing techniques. While many radar detection schemes have been proposed in the literature, there is a need to look at this problem through the 5G lens. In other words, it is key to design methods that can detect the presence of an airborne radar signal within the 5G frame format.

At Rice, we have designed a radar emulator (using a software-defined radio mounted on a drone), and we are using the NVIDIA Aerial platform to collect channel state information (CSI) from users polluted by radar signals with different configurations, such as range and speed. Using the CSI datasets, we are investigating the use of AI in detecting radar signal characteristics in CSI data. Future work will include the real-time implementation of such AI-based techniques as an add-on module to the Aerial platform that could not only detect radar but also take interference avoidance measures in an appropriately latency-sensitive manner.
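As a point of comparison for any learned detector, a simple non-AI baseline can be useful when sanity-checking a CSI dataset. The sketch below is not the method under investigation at Rice. It is a robust outlier test on per-snapshot CSI power, with the array layout `(n_snapshots, n_antennas, n_subcarriers)`, the function name, and the threshold all assumed for illustration.

```python
import numpy as np

def flag_anomalous_snapshots(csi, threshold=6.0):
    """Flag CSI snapshots whose mean power is a robust outlier.

    csi: complex array of shape (n_snapshots, n_antennas, n_subcarriers)
         (an assumed layout, not an Aerial data format).
    Returns a boolean mask of length n_snapshots.
    """
    power = np.mean(np.abs(csi) ** 2, axis=(1, 2))
    median = np.median(power)
    # Median absolute deviation, scaled to match the standard deviation
    # of a Gaussian; the small constant guards against division by zero.
    mad = np.median(np.abs(power - median))
    scale = 1.4826 * mad + 1e-12
    return (power - median) / scale > threshold

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shape = (200, 8, 64)
    csi = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)
    # Emulate strong interference on two snapshots (synthetic, for illustration only).
    csi[[50, 120]] += 2.0
    print(np.flatnonzero(flag_anomalous_snapshots(csi)))
```

A power test like this only reveals that something unusual hit a snapshot. Recovering radar characteristics such as range and speed from CSI is the harder problem that motivates the learned approaches described above.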

Self-adapting vRANs

The softwarization of the RAN enables rethinking its control and optimization in ways that were impossible with black-boxed hardware. To achieve higher throughput or lower latency in vRAN software, for example through higher-order spatial multiplexing, more compute resources must be used. This translates to a higher number of CPU cores or to shifting more compute kernels to GPUs. The programmability of the NVIDIA Aerial platform allows us to benchmark wireless performance under varying compute loads.

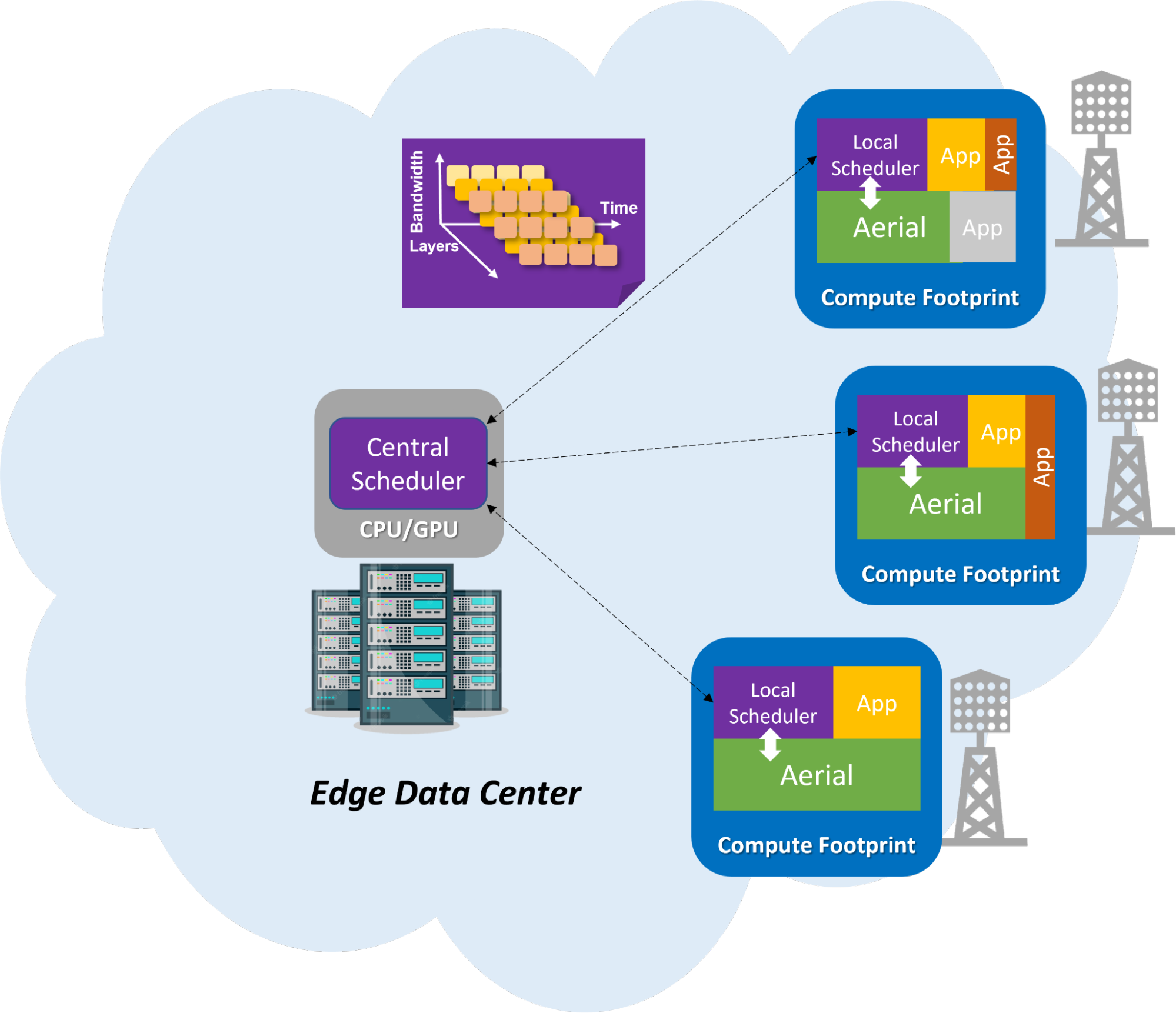

At Rice, we are investigating the amount of GPU resources needed to achieve a certain data rate. Since the achievable data rate in wireless also depends on the propagation environment, i.e., the wireless channel, it is important to jointly model both effects in the vRAN context. In general, this leads to the design of RAN schedulers that jointly and adaptively allocate compute resources as well as radio resources in the RAN environment. The scheduler considers the available compute resources as well as the QoS requirements of the RAN users when allocating radio resources. It can be composed of a local and a central module. The local scheduler adapts not only to varying channel conditions but also to varying compute loads caused by other apps running in the same computing environment. In the case of Aerial, latency-sensitive apps, such as the one explained in the previous section, can run on the same GPU. The central scheduler controls multiple local schedulers connected to different vRAN instances. Each scheduler can be designed using deep reinforcement learning (DRL) models, where the scheduler decides how to allocate resources based on the compute and channel environments. Our recent work has shown the feasibility and scalability of DRL in massive MIMO user scheduling, where the action space is very large [4]. Figure 2 shows an overview of the resource scheduler architecture that enables self-adapting vRANs.

Figure 2. Self-adapting vRANs architecture
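To illustrate what jointly allocating compute and radio resources can mean for a local scheduler, here is a deliberately simple greedy heuristic. It is not the DRL scheduler of [4], and every name, unit, and cost model in it (for example, a fixed compute cost per physical resource block, or PRB) is a hypothetical simplification.

```python
import math
from dataclasses import dataclass

@dataclass
class User:
    name: str
    rate_per_prb: float  # achievable Mbit/s per PRB; depends on the channel (must be > 0)
    demand: float        # QoS rate target in Mbit/s

def greedy_schedule(users, total_prbs, compute_budget, compute_per_prb=1.0):
    """Grant PRBs to users, best channel first, under radio and compute limits.

    compute_budget and compute_per_prb are in arbitrary, assumed units; the
    compute limit caps how many PRBs can be processed in a scheduling interval.
    """
    usable_prbs = min(total_prbs, int(compute_budget // compute_per_prb))
    allocation = {user.name: 0 for user in users}
    for user in sorted(users, key=lambda u: u.rate_per_prb, reverse=True):
        if usable_prbs == 0:
            break
        needed = math.ceil(user.demand / user.rate_per_prb)
        granted = min(needed, usable_prbs)
        allocation[user.name] = granted
        usable_prbs -= granted
    return allocation

if __name__ == "__main__":
    users = [User("a", 2.0, 10.0), User("b", 1.0, 4.0), User("c", 0.5, 3.0)]
    # Ample compute: the radio demand is fully served.
    print(greedy_schedule(users, total_prbs=20, compute_budget=100))
    # A co-located app has taken GPU capacity: compute becomes the bottleneck.
    print(greedy_schedule(users, total_prbs=20, compute_budget=12))
```

In the second call the compute budget caps the grant at 12 PRBs, so the user with the weakest channel is only partially served. A learned scheduler would aim to make such trade-offs adaptively rather than through a fixed rule.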

Conclusion

GPU-based vRANs, as demonstrated by development and testing on NVIDIA Aerial, provide an exciting opportunity for researchers to demonstrate the capabilities of AI in improving the performance of next-generation cellular networks. At Rice, we will continue to work on enhancing the robustness of next-generation wireless network architectures by demonstrating these research ideas on the NVIDIA Aerial platform.

Authors

Rahman Doost-Mohammady is an assistant research professor in the Department of Electrical and Computer Engineering at Rice University. His work focuses on the design and efficient implementation of next-generation wireless network systems. He is the technical lead for the Rice RENEW project. He received his Ph.D. from Northeastern University in 2014, his M.S. from Delft University of Technology in 2009, and his B.Sc. from Sharif University of Technology in 2007.

Santiago Segarra is an associate professor in the Department of Electrical and Computer Engineering at Rice University. His research interests include network theory, data analysis, machine learning, and graph signal processing. He received the B.Sc. degree in Industrial Engineering with highest honors (Valedictorian) from the Instituto Tecnológico de Buenos Aires (ITBA), Argentina, in 2011, the M.Sc. in Electrical Engineering from the University of Pennsylvania (Penn), Philadelphia, in 2014 and the Ph.D. degree in Electrical and Systems Engineering from Penn in 2016.

Ashutosh Sabharwal is a professor and chair in the Department of Electrical and Computer Engineering at Rice University. He works in two independent areas: wireless networks and digital health. His recent work in wireless focuses on joint wireless communications and imaging and on large-scale experimental platforms; he leads the Rice RENEW project. He received his Ph.D. and M.S. from Ohio State University in 1999 and 1995, respectively, and his B.Tech. from the Indian Institute of Technology, New Delhi, in 1993.

References

[1] M. Khani, M. Alizadeh, J. Hoydis and P. Fleming, "Adaptive Neural Signal Detection for Massive MIMO," in IEEE Transactions on Wireless Communications, vol. 19, no. 8, pp. 5635-5648, Aug. 2020, doi: 10.1109/TWC.2020.2996144.

[2] K. Pratik, B. D. Rao and M. Welling, "RE-MIMO: Recurrent and Permutation Equivariant Neural MIMO Detection," in IEEE Transactions on Signal Processing, vol. 69, pp. 459-473, 2021, doi: 10.1109/TSP.2020.3045199.

[3] N. Zilberstein, C. Dick, R. Doost-Mohammady, A. Sabharwal and S. Segarra, "Annealed Langevin Dynamics for Massive MIMO Detection," in IEEE Transactions on Wireless Communications, 2022, doi: 10.1109/TWC.2022.3221057.

[4] Q. An, S. Segarra, C. Dick, A. Sabharwal and R. Doost-Mohammady, "A Deep Reinforcement Learning-Based Resource Scheduler for Massive MIMO Networks," in arXiv, 2023, doi: 10.48550/arXiv.2303.00958.